This Q&A is part of DHI’s ‘Modelling in practice’ series, exploring practical approaches to efficient and accurate modelling workflows.

—————————————–

Managing large datasets and generating hundreds of plots is a common challenge in environmental and water quality modelling. In this Q&A, Theresa Chu, Senior Maritime Engineer at Mott MacDonald, shares her experience streamlining post-processing workflows, reducing errors and saving time on a recent hydrodynamic modelling project. She used Python tools, including packages designed to work with MIKE Powered by DHI software, to handle complex model files. These tools supported tasks across the workflow, from creating input data and modifying output files to extracting results and quickly visualising outputs.

Q: What challenge were you facing in your project?

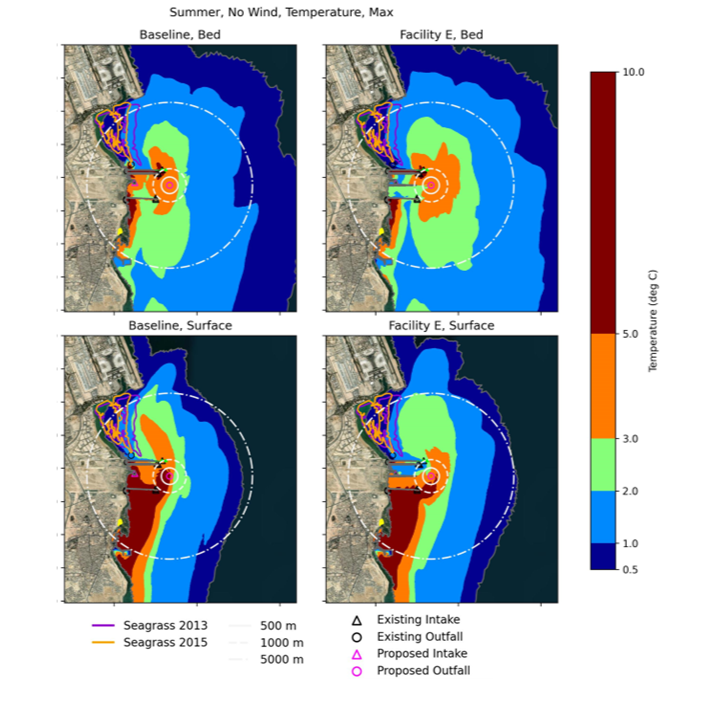

I was working on a hydrodynamic model in MIKE 3 that included temperature and salinity. Initially, I reviewed results using Plot Composer in MIKE Zero, the standard graphical interface, which provided a quick initial layout for reporting results. However, with two parameters, five layers of results and comparisons between the existing and proposed layouts, creating each plot individually took many hours of work. That’s when I turned to scripting.
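To show why the plot count grows so quickly, here is a minimal sketch that enumerates every plot a script would produce in a loop. The parameter names, the five layer numbers and the choice of three views per combination (existing, proposed and their difference) are assumptions for illustration, as is the filename pattern; the actual project may have been organised differently.

```python
from itertools import product

# Hypothetical setup: two parameters, five vertical layers, and one plot per
# view (existing layout, proposed layout, and their difference).
PARAMETERS = ["Temperature", "Salinity"]
LAYERS = [1, 2, 3, 4, 5]
VIEWS = ["existing", "proposed", "difference"]


def plot_jobs(parameters, layers, views):
    """Return one (parameter, layer, view, filename) tuple per required plot."""
    jobs = []
    for param, layer, view in product(parameters, layers, views):
        # Predictable, descriptive filenames make the outputs easy to check.
        filename = f"{param.lower()}_layer{layer}_{view}.png"
        jobs.append((param, layer, view, filename))
    return jobs


jobs = plot_jobs(PARAMETERS, LAYERS, VIEWS)
print(len(jobs))  # 2 parameters x 5 layers x 3 views = 30 plots
print(jobs[0])
```

In a real script, each job would call a plotting function, so adding a parameter or layer changes one list rather than adding another round of manual plotting.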

Q: How did scripting help with post-processing?

Using Python tools (in my case, MIKE IO) to read result files, calculate statistics and the differences between the proposed and existing layouts, and plot results at the required scale with a basemap saved a significant amount of time (and sanity). Scripting also helped reduce human error. In a graphical interface, it’s easy to select the wrong scenario when comparing results, but a script ensures the correct files are always matched. For input boundary conditions, rather than manually copying data from a spreadsheet into a dfs0 file, which can introduce errors, Python tools can convert time series directly between Excel and dfs0 formats.
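The file-matching and comparison steps can be sketched in plain Python. This is a hypothetical illustration, not the script used on the project: it assumes a naming convention of `<case>_existing.dfsu` and `<case>_proposed.dfsu`, and represents results as plain lists of per-element time series. In practice the values would be read from the result files with MIKE IO (for example via `mikeio.read`) and plotted with a library such as matplotlib; both are left out here so the sketch runs without those packages.

```python
from pathlib import PurePath
from statistics import mean


def pair_scenarios(filenames):
    """Match each existing-layout file to its proposed-layout counterpart.

    Assumes the hypothetical convention '<case>_existing.dfsu' and
    '<case>_proposed.dfsu'. Raises ValueError if any case is unmatched,
    so a missing or misnamed file cannot silently skip a comparison.
    """
    existing, proposed = {}, {}
    for name in filenames:
        case, _, layout = PurePath(name).stem.rpartition("_")
        if layout == "existing":
            existing[case] = name
        elif layout == "proposed":
            proposed[case] = name
    unmatched = set(existing) ^ set(proposed)
    if unmatched:
        raise ValueError(f"Unmatched cases: {sorted(unmatched)}")
    return {case: (existing[case], proposed[case]) for case in sorted(existing)}


def mean_difference(existing_series, proposed_series):
    """Time-averaged difference (proposed minus existing) for each element."""
    if len(existing_series) != len(proposed_series):
        raise ValueError("Both layouts must cover the same elements")
    return [mean(p) - mean(e) for e, p in zip(existing_series, proposed_series)]


files = ["wet_season_existing.dfsu", "wet_season_proposed.dfsu",
         "dry_season_proposed.dfsu", "dry_season_existing.dfsu"]
pairs = pair_scenarios(files)
print(pairs["dry_season"])

# Toy values: two elements, two time steps each.
print(mean_difference([[20.0, 22.0], [18.0, 18.0]],
                      [[21.0, 23.0], [18.0, 19.0]]))  # [1.0, 0.5]
```

Failing loudly on an unmatched case is a deliberate choice: it turns the "wrong scenario selected" mistake into an error the script reports, rather than a plot that looks plausible but compares the wrong files.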

Q: Did you need a programming background to do this?

Not necessarily. I started small, building scripts gradually and testing each step. Documentation and examples were helpful in understanding the process. The effort to write the script is an investment, but it has proven its value.

Q: What broader benefits did you see from this approach?

Beyond time savings, scripting improved consistency and data integrity. It also gave us flexibility to adapt quickly when project requirements changed. And it’s not just about this one project – each time we build a script, we’re creating something reusable that can benefit future work.

Q: Any final thoughts on the role of automation in modelling?

Automation is becoming an important part of modern modelling workflows. It reduces errors, improves reproducibility and frees up time for more meaningful analysis. As tools and workflows continue to evolve, there will be further opportunities to streamline and enhance how we work.

—————————————–

A practical resource for modellers

Theresa’s experience is a great example of how practical automation can transform modelling workflows, making them more efficient, accurate and adaptable. Her use of scripting tools like MIKE IO shows how even small steps toward automation can deliver big returns in complex modelling projects.

If you’re interested in exploring similar approaches, our on-demand webinar, ‘MIKE IO – An introduction to manipulating MIKE modelling data files with Python’, offers a helpful starting point.